Stereo Imaging

CS 481 Lecture, Dr. Lawlor

The human visual system perceives 3D shape from a variety of sources:

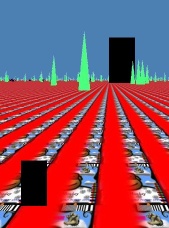

- Size, occlusion, lighting, and texture cues work together to help your brain estimate objects' size and distance. For example, both black doors in this image are exactly the same size on screen, but we perceive the door on the horizon as being much farther away, and hence bigger. The same illusion causes the moon to look way bigger down on the horizon (where it's clearly behind the mountains, and hence must be huge) than near-overhead (without any distance scale, your brain isn't faced with the enormous size of the moon).

- Motion parallax is probably the next strongest visual depth cue: when you move your head sideways by a distance h, 3D objects at a distance d appear to shift by about h/d radians (for h much smaller than d). Because the shift is inversely proportional to distance, motion parallax falls off quickly with distance. Faking motion parallax is what 3D graphics is all about, but you've got to make sure the camera (or the objects) can move sideways relative to the view direction. Some systems have a "wiggle" mode that slides the camera back and forth to enhance motion parallax. Head-tracking systems, which watch the user's head location, can do a good job of simulating motion parallax.

- Atmospheric perspective is another strong distance cue at long ranges. It is caused by sky light scattering into your view: as your line of sight cuts through more and more air, the color you see eventually converges on the sky color. For example, the more distant hills and mountains in the photo below begin to approach sky color. A mathematical model might be that with every mile of distance, we blend in a little more sky light (C(d+1)=0.9*C(d)+0.1*sky), which works out to the usual exponential model C(d)=pow(0.9,d)*C(0)+(1-pow(0.9,d))*sky. The computer graphics equivalent of this effect is usually called "fog", although in graphics fog is often absurdly thick. Real atmospheric scattering tends to take tens of kilometers to significantly affect colors, while in games the end of a hallway is often visibly foggier. Maybe game programmers work in really smoky buildings, or live in foggy places like Seattle or London!

- Stereo disparity is the difference between the images seen by your two eyes. Here's an experiment: try covering up one eye, holding your head perfectly still, and staring at the world. It looks flat! Stereo disparity is pretty tricky to add to rendering systems, so of course people are fascinated by it!
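The two formulas in the list above, the h/d motion parallax shift and the per-mile sky blend, are easy to check numerically. Here is a minimal Python sketch. The function names, the 0.1 m head motion, and the RGB colors are all made-up illustration values, and the parallax function assumes the object starts straight ahead of the viewer.

```python
import math

def parallax_shift(h, d):
    """Exact angular shift (radians) of a point that starts straight ahead
    at distance d, after the viewer steps sideways by h."""
    return math.atan2(h, d)

def fog_step(color, sky, keep=0.9):
    """One mile of air: keep 90% of the incoming color, blend in 10% sky."""
    return tuple(keep * c + (1.0 - keep) * s for c, s in zip(color, sky))

def fog_closed_form(color0, sky, d, keep=0.9):
    """Closed form of d repeated fog_step calls: pow(keep, d) weights the
    original color, and the rest of the weight goes to the sky color."""
    t = keep ** d
    return tuple(t * c + (1.0 - t) * s for c, s in zip(color0, sky))

if __name__ == "__main__":
    h = 0.1  # sideways head motion in meters (assumed value)
    for d in (0.5, 2.0, 10.0, 100.0):
        print(f"d={d:6.1f} m  exact={parallax_shift(h, d):.5f} rad  "
              f"h/d={h / d:.5f} rad")

    hill = (0.2, 0.5, 0.1)  # assumed RGB for a green hillside
    sky = (0.6, 0.7, 0.9)   # assumed RGB for the sky
    stepped = hill
    for mile in range(10):
        stepped = fog_step(stepped, sky)
    print("ten blend steps:", stepped)
    print("closed form    :", fog_closed_form(hill, sky, 10))
```

The small-angle estimate h/d and the exact arctangent agree closely once d is much larger than h, and ten applications of the blend step match the closed-form exponential up to floating-point rounding.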

Stereo Disparity

Your two eyes see the world slightly differently. Your brain estimates depth from these differences. To simulate this effect, you first need to render the world from the point of view of each eye. This is easy. You then need to feed each eye's image into the corresponding eye independently. That's the hard part, but there are lots of interesting ways to do it:

- You can show the images side-by-side, and let the user cross their eyes, or go wall-eyed. Special hardware called a stereoscope can help you do this, and dates back to the Victorian era. I shoot a lot of my digital photos in stereo, and then just view them side by side.

- You can build two independent screens, and put one in front of each eye. This looks cool, but so far it's flopped in the marketplace (see Nintendo's 1995 "Virtual Boy").

- You can build one high-resolution screen, and put a sheet of tiny lenses in front of the screen, so each eye sees a different part of the screen through the lenses. This is called a lenticular display, and has the advantage that you don't need to wear anything funky on your head (it's "autostereoscopic"). The downside is that even commercial systems currently have fairly pathetic resolution and high prices.

- You can use holography to capture the light from real-life or digital models. Full holography requires maybe 50,000 dpi, but you can do a decent job with as few as 5,000 dpi. MIT has built several realtime computational holographic output devices based on graphics cards.

- You can use a single screen, and show each eye's image in rapid succession. You can then use lightweight LCD shutterglasses to black out the other eye, and the user's brain will fuse the images together. Because the left-right flipping cuts the per-eye refresh rate in half, it helps to start with a pretty high-refresh-rate system (90+Hz works best). The upside is this is really quite cheap (my eDimensional glasses cost $40), and it works on any display with varying degrees of success. I've gotten poor results on LCD displays and projectors (they just can't switch between the left and right image fast enough, resulting in ghosting), passable results on CRT monitors (there's still a bit of ghosting, due to the phosphors staying lit), and truly incredible results with some DLP projectors. DLP projectors are designed to flash out their images to a passive screen, so there's zero ghosting or blurring. The ARSC Discovery Lab uses shutterglasses too.
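Whichever display method you pick, the "easy" first step is rendering from each eye's point of view. Here is a minimal Python sketch of that step. It assumes a simple pinhole camera looking down +z with x pointing right, two parallel (not toed-in) eye cameras, and an eye separation of 0.065 m. All the names and values are hypothetical, chosen only for illustration.

```python
def eye_positions(head, right, separation=0.065):
    """Offset the head position by half the eye separation along the
    'right' unit vector to get the left and right eye positions."""
    half = separation / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye

def project_x(point, eye, focal=1.0):
    """Pinhole projection of a 3D point onto the image x axis, for a
    camera at 'eye' looking down +z (assumed convention)."""
    return focal * (point[0] - eye[0]) / (point[2] - eye[2])

def disparity(point, head=(0.0, 0.0, 0.0), separation=0.065):
    """Horizontal difference between where the two eyes see the point."""
    left_eye, right_eye = eye_positions(head, (1.0, 0.0, 0.0), separation)
    return project_x(point, left_eye) - project_x(point, right_eye)

if __name__ == "__main__":
    for z in (0.5, 1.0, 5.0, 50.0):
        print(f"z={z:5.1f} m  disparity={disparity((0.0, 0.0, z)):.5f}")
```

With these assumptions the disparity works out to separation*focal/z, so like motion parallax it shrinks quickly with distance. A real renderer would draw the whole scene twice, once from each eye position, instead of projecting single points.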

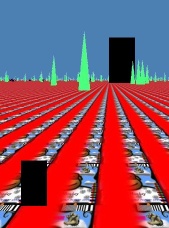
